Abstract

In this paper, we introduce a new challenge: synthesizing novel views in practical environments from a limited set of multi-view images captured under varying lighting conditions. Neural radiance fields (NeRF), one of the pioneering works for this task, demand an extensive set of multi-view images taken under constrained illumination, which is often unattainable in real-world settings. While some previous works have managed to synthesize novel views from images with different illumination, their performance still relies on a substantial number of input views. To address this problem, we propose ExtremeNeRF, which utilizes multi-view albedo consistency supported by geometric alignment. Specifically, we extract intrinsic image components that should be illumination-invariant across different views, enabling direct appearance comparison between the input and novel views under unconstrained illumination. We offer thorough experimental results for task evaluation on the newly created NeRF Extreme benchmark, the first in-the-wild benchmark for novel view synthesis under multiple viewing directions and varying illuminations.

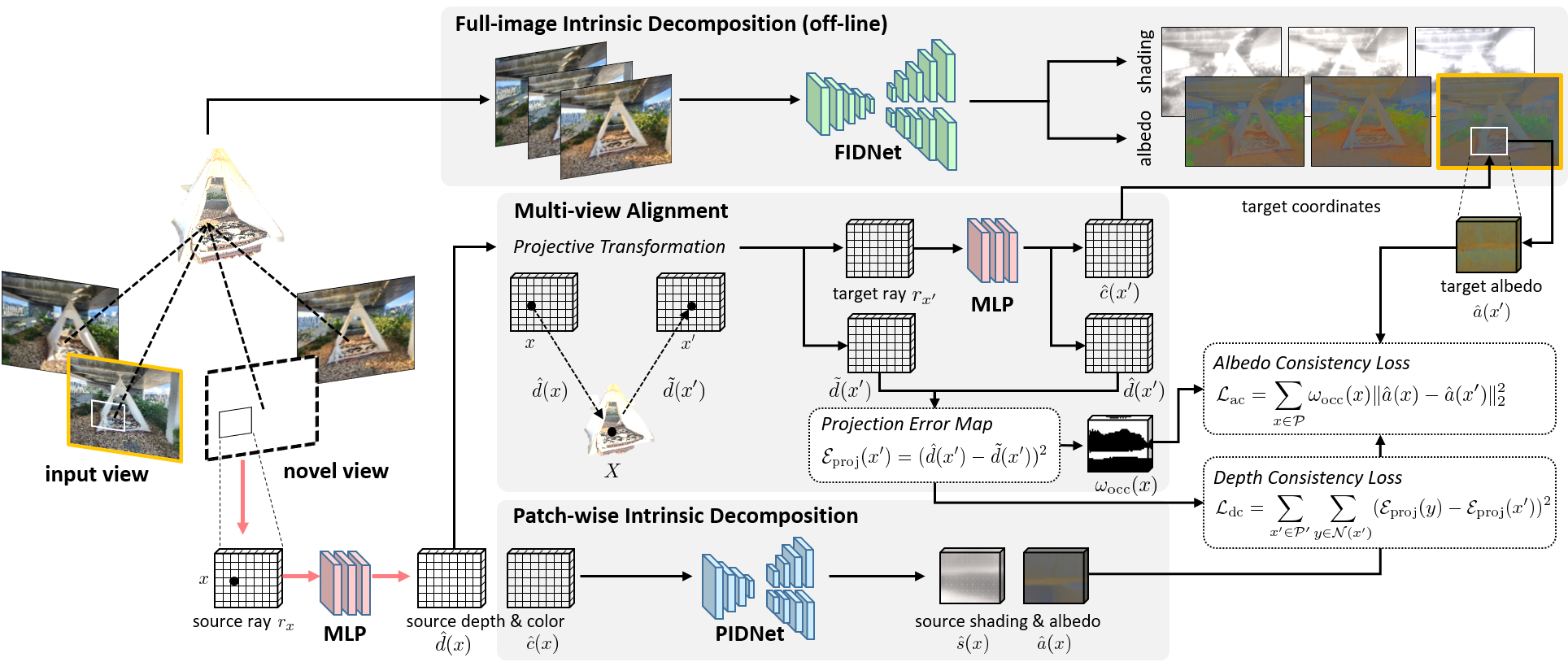

Overall Architecture

The objective of this work is to build an illumination-robust few-shot view synthesis framework by regularizing the albedo, which should be identical across multi-view images regardless of illumination. The two major challenges are to 1) achieve reliable geometric alignment between different views and 2) decompose the albedo of a rendered view without extensive computational cost.

Instead of directly addressing NeRF-based intrinsic decomposition, we integrate a pre-existing intrinsic decomposition network with NeRF optimization. Our few-shot view synthesis framework employs an offline intrinsic decomposition network that offers global context-aware pseudo-albedo ground truth without additional computational overhead. As illustrated in the figure below, FIDNet provides pseudo-albedo ground truths for the input images before training starts, and these guide PIDNet in extracting intrinsic components for novel synthesized views based on the pseudo ground truths and multi-view correspondences. This allows our NeRF to learn illumination-robust few-shot view synthesis through cross-view albedo consistency. Subsequent subsections detail each framework component.

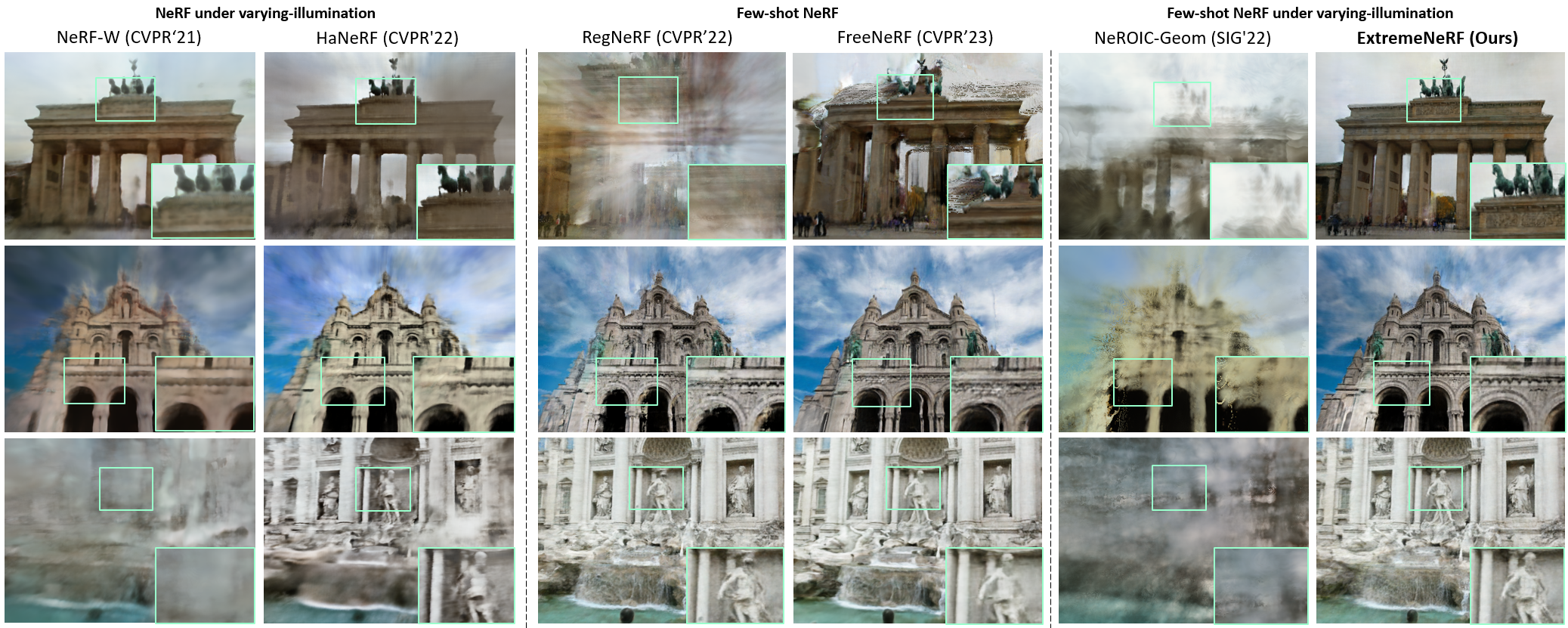
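The idea of cross-view albedo consistency can be illustrated with a minimal sketch. It is not the loss used by ExtremeNeRF, whose exact formulation is not given on this page. It assumes that pixel correspondences between an input view and a novel view are already available, and it compares albedo values with a plain mean absolute difference. The function name `albedo_consistency_loss`, the type aliases, and the nested-list image layout are all hypothetical choices made for illustration.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int]        # (row, col) index into an albedo map
RGB = Sequence[float]          # albedo value with three channels
AlbedoMap = List[List[RGB]]    # albedo_map[row][col] -> RGB


def albedo_consistency_loss(
    pseudo_albedo: AlbedoMap,
    novel_albedo: AlbedoMap,
    correspondences: Sequence[Tuple[Pixel, Pixel]],
) -> float:
    """Mean absolute albedo difference over corresponding pixels.

    Assumption: each correspondence pairs a pixel in the input view
    (with pseudo-albedo ground truth) with the pixel in the novel view
    that observes the same surface point.
    """
    if not correspondences:
        return 0.0
    total = 0.0
    for (r_in, c_in), (r_nv, c_nv) in correspondences:
        a_in = pseudo_albedo[r_in][c_in]
        a_nv = novel_albedo[r_nv][c_nv]
        # Average the per-channel absolute difference for this pixel pair.
        total += sum(abs(x - y) for x, y in zip(a_in, a_nv)) / len(a_in)
    return total / len(correspondences)


# Toy example: a 1x2 input view and a novel view that sees the same two
# surface points in swapped pixel positions.
input_albedo = [[(0.5, 0.5, 0.5), (0.25, 0.5, 0.75)]]
novel_consistent = [[(0.25, 0.5, 0.75), (0.5, 0.5, 0.5)]]
novel_inconsistent = [[(0.25, 0.5, 0.75), (1.0, 1.0, 1.0)]]
matches = [((0, 0), (0, 1)), ((0, 1), (0, 0))]

loss_consistent = albedo_consistency_loss(input_albedo, novel_consistent, matches)
loss_inconsistent = albedo_consistency_loss(input_albedo, novel_inconsistent, matches)
```

In this toy setting the consistent novel view incurs zero loss, while the view whose albedo disagrees at one matched pixel is penalized. Because albedo is meant to be independent of lighting, such a comparison remains meaningful even when the two views were captured under different illumination.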

Experimental Results

NeRF Extreme

Novel views and the corresponding depth maps synthesized by the baselines and our proposed method from 3 input views. Our proposed method produces more plausible synthesis results than the baseline methods.

Phototourism F^3

Given 3 input views with varying lighting, our proposed method synthesizes reliable color images and depth maps, while the other baselines show floating artifacts and poorly synthesized depth.

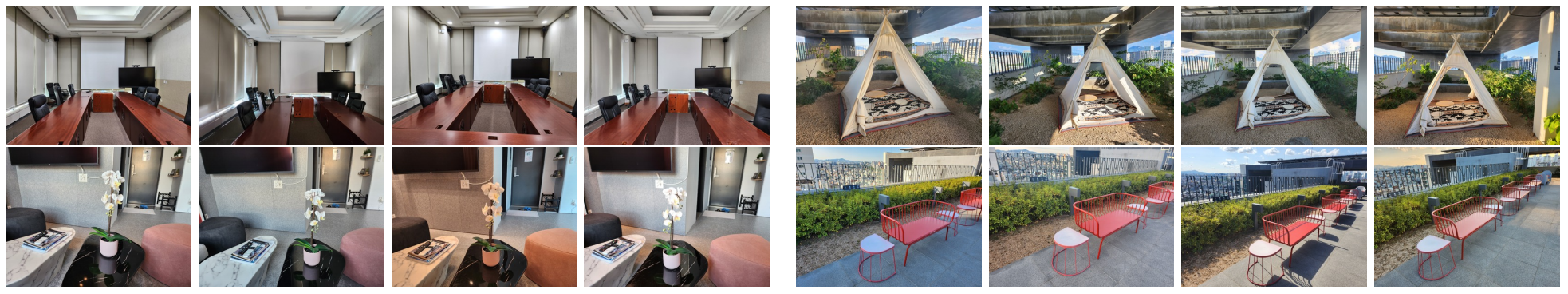

Multi-view / Multi-illumination Dataset

NeRF Extreme

To build a view synthesis benchmark that fully reflects unconstrained environments such as mobile phone images captured under casual conditions, we collected multi-view images with a variety of light sources such as multiple light bulbs and the sun.

Our dataset consists of 10 scenes, 6 indoor and 4 outdoor. We took 40 images per scene at a resolution of 3,000 × 4,000, with 30 images in the train set and 10 images in the test set. The training images of each scene are captured under at least 3 different lighting conditions. To make the dataset more widely useful, we captured the test scenes under mild lighting conditions, rather than extremely low or high contrasts or intensities, similar to images in typical NeRF benchmark datasets.
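As a quick sanity check on these numbers, the sketch below encodes the per-scene figures stated above and derives the overall totals. The class name `BenchmarkLayout` and its field names are hypothetical and do not correspond to any released data loader. The totals are derived from the stated per-scene counts rather than quoted from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BenchmarkLayout:
    """Per-scene figures as stated in the text; totals are derived."""
    indoor_scenes: int = 6
    outdoor_scenes: int = 4
    train_per_scene: int = 30
    test_per_scene: int = 10
    min_train_lighting_conditions: int = 3

    @property
    def num_scenes(self) -> int:
        return self.indoor_scenes + self.outdoor_scenes

    @property
    def images_per_scene(self) -> int:
        return self.train_per_scene + self.test_per_scene

    @property
    def total_train(self) -> int:
        return self.num_scenes * self.train_per_scene

    @property
    def total_test(self) -> int:
        return self.num_scenes * self.test_per_scene

    @property
    def total_images(self) -> int:
        return self.num_scenes * self.images_per_scene


layout = BenchmarkLayout()
summary = (
    f"{layout.num_scenes} scenes, {layout.images_per_scene} images each: "
    f"{layout.total_train} train / {layout.total_test} test"
)
```

Under these figures the benchmark would contain 400 images in total, 300 for training and 100 for testing.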

BibTeX

@misc{lee2023fewshot,
  title={Few-shot Neural Radiance Fields Under Unconstrained Illumination},
  author={SeokYeong Lee and JunYong Choi and Seungryong Kim and Ig-Jae Kim and Junghyun Cho},
  year={2023},
  eprint={2303.11728},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}